AI and Global Power Consumption: Trends and Projections

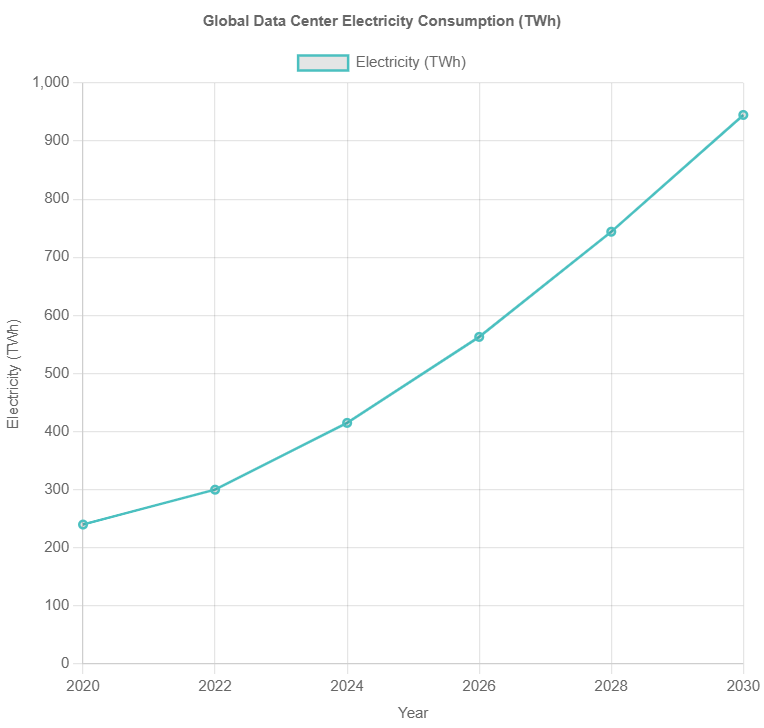

In recent years, the explosive growth of artificial intelligence (AI) has become a major driver of rising electricity demand worldwide. Data centers – the backbone of AI training and inference – consumed roughly 415 TWh in 2024 (about 1.5% of global electricity), and their consumption has grown ~12% per year since 2017. The International Energy Agency (IEA) projects that data center demand will more than double to ~945 TWh by 2030, driven primarily by AI workloads. In the United States, for example, data centers are expected to consume more electricity by 2030 than the manufacture of all energy-intensive goods combined. IDC similarly estimates that global data center energy use will grow from ~352 TWh in 2023 to ~857 TWh by 2028 (≈19.5% CAGR). Data centers still account for only a small share of total electricity, but their local impact is significant: nearly half of US data center capacity is concentrated in just five regions. The chart below illustrates historical and projected global data center electricity use.

Beyond data centers, other sectors are also adopting AI in ways that affect power use. Edge and consumer devices (smartphones, smart speakers, etc.) now include on-device AI chips, but machine learning (ML) processing remains a small fraction of their energy use: one analysis found that ML tasks consume <3% of a smartphone’s energy on average. Industrial applications, such as robotics and factory automation, can reduce energy per task, but AI-driven systems (e.g. smart robots, complex vision systems, IoT analytics) still add to facility power use. Modern industrial robots, for instance, often include energy-saving features (regenerative braking, on-demand power modes) that cut consumption compared to older equipment, while AI controls and sensors add to the overall electrical load. In infrastructure and transport, AI is used to improve efficiency (smart grids, optimized traffic flows), which can reduce energy use. In every sector, AI’s net effect depends on how its own power needs compare with the efficiency gains it delivers.

Data Center Energy Growth

Data centers form the core of AI’s electricity footprint, and recent data and projections underscore this impact. In North America alone, data center power capacity climbed from ~2,688 MW in 2022 to 5,341 MW in 2023, roughly doubling in a single year, a jump largely driven by new AI-focused facilities. Globally, data centers consumed about 460 TWh in 2022 (an estimate that also counts cryptocurrency mining), between the annual electricity consumption of Saudi Arabia and France. By 2026, consumption could reach ~1,050 TWh, roughly the electricity demand of Japan today; this was the upper end of an earlier IEA projection range and is not directly comparable with the 415 TWh (2024) figure above. According to the IEA, AI workloads are “the most important driver” of this surge.
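Capacity (MW) and consumption (TWh) measure different things: capacity is a rate of power draw, while consumption is energy accumulated over time. A minimal sketch converting one into the other, assuming an 8,760-hour year; the `utilization` parameter and the 60% value below are hypothetical illustration, not reported figures:

```python
HOURS_PER_YEAR = 8760  # non-leap year

def annual_twh(capacity_mw: float, utilization: float = 1.0) -> float:
    """Annual energy (TWh) drawn by `capacity_mw` of load at an average utilization.

    utilization = 1.0 means running at full capacity all year, an upper bound
    that real facilities do not reach.
    """
    return capacity_mw * utilization * HOURS_PER_YEAR / 1_000_000  # MWh -> TWh

growth = 5341 / 2688 - 1
print(f"North American capacity growth, 2022 -> 2023: {growth:.0%}")  # 99%
print(f"5,341 MW at full load: {annual_twh(5341):.1f} TWh/yr")          # 46.8
# Hypothetical 60% average utilization, for illustration only.
print(f"5,341 MW at 60% load: {annual_twh(5341, 0.6):.1f} TWh/yr")
```

The point of the conversion is that a doubling of capacity does not by itself reveal annual consumption; that depends on how heavily the capacity is actually used.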

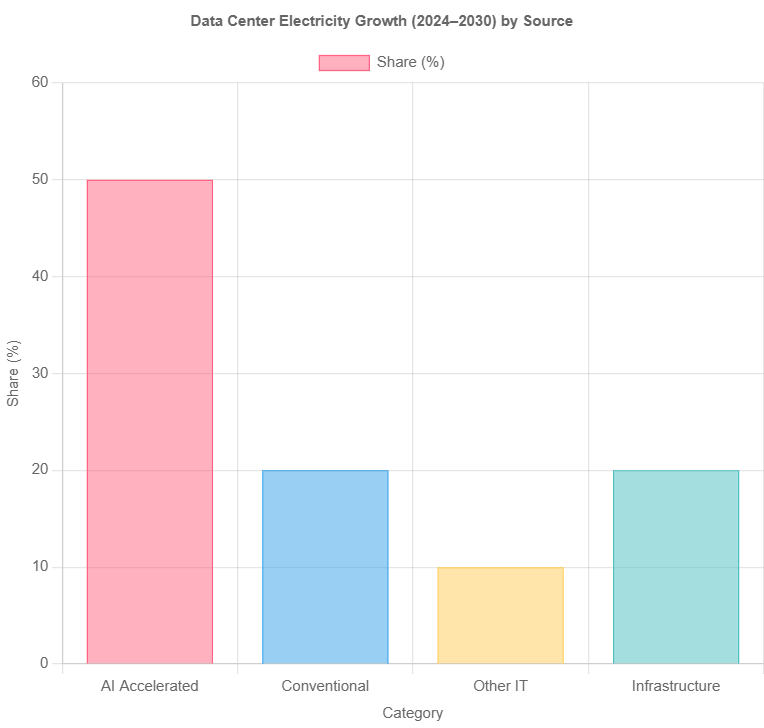

AI-specific hardware is intensifying this growth. A generative AI training cluster, packed with GPUs or TPUs, can consume seven to eight times more energy than a typical computing workload. The IEA reports that electricity use by accelerated servers (i.e. GPU/AI servers) is growing at ~30% per year, versus only ~9% for conventional servers. As a result, accelerated servers are expected to account for roughly half of the net increase in data center electricity by 2030, with the remainder split among conventional servers, storage, networking, and cooling infrastructure. In advanced economies, data centers are projected to drive over 20% of total electricity demand growth through 2030. This rapid expansion has prompted some regions to curb or regulate data center construction: in Ireland, for example, where data centers already use ~20% of national electricity, the grid operator effectively paused new data center connections in the Dublin area.
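The gap between ~30% and ~9% annual growth compounds quickly. A minimal sketch of the multiplication over 2024–2030, assuming (for illustration) that both rates hold constant for all six years:

```python
YEARS = 2030 - 2024  # six years

def growth_multiple(annual_rate: float, years: int) -> float:
    """Factor by which a quantity grows at a constant annual rate."""
    return (1 + annual_rate) ** years

accelerated = growth_multiple(0.30, YEARS)   # ~30%/yr quoted for accelerated servers
conventional = growth_multiple(0.09, YEARS)  # ~9%/yr quoted for conventional servers
print(f"accelerated: x{accelerated:.1f}, conventional: x{conventional:.1f}")  # x4.8, x1.7
```

Under that constant-rate assumption, accelerated-server electricity would grow nearly fivefold while conventional-server electricity grows by about two-thirds, which is why accelerators come to dominate the increase.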

Chart – Data Center Energy Growth by Component (2024–2030): accelerated (AI) vs. conventional servers and other equipment

The chart above (derived from IEA data) shows that approximately half of the projected increase in data center electricity use (2024–2030) will come from AI-accelerated servers. Conventional servers contribute roughly another 20%, and the remainder is split among other IT equipment (storage, networking) and facility infrastructure such as cooling.

The largest cloud providers are expanding especially aggressively. Microsoft and Google have reported steep rises in emissions tied to data center growth: Microsoft’s total emissions grew ~30% since 2020, and Google’s ~50% since 2019, in both cases largely because of AI-driven data center expansion. At the same time, these companies are investing in renewables and efficiency: the IEA expects about half of the new data center power demand through 2030 to be met by renewable energy, and hyperscale operators continually improve cooling and PUE (power usage effectiveness) to offset some of the growth.

AI Model Energy Metrics

Training and using AI models consumes substantial energy. Training a large model is especially intensive, though it is a one-time cost per model version. For example, training OpenAI’s GPT-3 (175 billion parameters) required an estimated 1,287 MWh of electricity (over 1.2 GWh). By comparison, streaming one hour of Netflix has been estimated at ~0.0008 MWh (0.8 kWh), so training GPT-3 used about as much energy as 1.6 million hours of video streaming. (Training GPT-4 is rumored to have taken ~50× more energy than GPT-3, which would imply on the order of 64,000 MWh if that factor is accurate.) Smaller NLP models also consume megawatt-hours: for instance, training BERT-base (~110M parameters) used on the order of 1.5 MWh.
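The comparisons in this paragraph are simple ratios. A short sketch reproducing them from the quoted estimates; the GPT-4 multiplier is the rumored factor, not a measured value:

```python
GPT3_TRAINING_MWH = 1287          # estimate quoted above
STREAMING_MWH_PER_HOUR = 0.0008   # per-hour streaming estimate quoted above
GPT4_MULTIPLIER = 50              # rumored factor, not a confirmed figure

streaming_hours = GPT3_TRAINING_MWH / STREAMING_MWH_PER_HOUR
gpt4_mwh = GPT3_TRAINING_MWH * GPT4_MULTIPLIER

print(f"GPT-3 training ~ {streaming_hours / 1e6:.1f} million streaming hours")  # 1.6
print(f"Implied GPT-4 training ~ {gpt4_mwh:,} MWh")                             # 64,350
```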

Once trained, inference (running the model on new inputs) costs far less energy per request but occurs continuously and at scale. A single GPT-3 query has been estimated at about 0.0003 kWh (0.3 Wh). Although tiny per query, this adds up across millions of users, and by one estimate a ChatGPT query uses roughly 5× more electricity than a typical web search. Image generation is costlier: generating one AI image (e.g. with Stable Diffusion or DALL·E) was measured at about 0.0029 kWh (2.9 Wh) on average, roughly a quarter of a full smartphone charge (≈0.012 kWh). Text generation needs much less: one study found ~0.047 kWh per 1,000 text-generation inferences (0.000047 kWh, or 0.047 Wh, per inference), and simpler language tasks such as classification need less still. In general, text-based models are more efficient than image or video models on a per-query basis.
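To see how “tiny per query” becomes significant at scale, multiply per-query energy by query volume. A minimal sketch; the one-billion-queries-per-day volume is a hypothetical round number, not a reported usage figure:

```python
WH_PER_GPT3_QUERY = 0.3     # per-query estimate quoted above
WH_PER_IMAGE = 2.9          # average per-image estimate quoted above
WH_PER_PHONE_CHARGE = 12.0  # 0.012 kWh, as quoted above
GPT3_TRAINING_GWH = 1.287   # training estimate quoted earlier

def annual_inference_gwh(queries_per_day: float, wh_per_query: float) -> float:
    """Yearly inference energy (GWh) for a constant daily query volume."""
    return queries_per_day * wh_per_query * 365 / 1e9  # Wh -> GWh

# Hypothetical volume of one billion queries per day (illustrative only).
yearly = annual_inference_gwh(1e9, WH_PER_GPT3_QUERY)
print(f"Inference: {yearly:.1f} GWh/yr, ~{yearly / GPT3_TRAINING_GWH:.0f}x GPT-3 training")
print(f"One image ~ {WH_PER_IMAGE / WH_PER_PHONE_CHARGE:.0%} of a phone charge")
```

At that hypothetical volume, a single year of inference would use on the order of 85 times GPT-3’s estimated training energy, which is why inference, not training, can dominate a widely used model’s footprint.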

These numbers illustrate that AI’s energy cost is a function of model size and usage. As models scale up (more parameters, more layers, more data), training costs grow steeply: an OpenAI analysis (2018) found the compute used by cutting-edge training runs doubling roughly every 3–4 months. Hardware and software innovations are partly blunting this trend. Specialized AI accelerators (GPUs, TPUs, NPUs) offer greater performance per watt than general-purpose CPUs, and Google’s TPU hardware (e.g. TPUv3) can train some models considerably more efficiently than GPUs. One report notes that Google trained a model with roughly seven times as many parameters as GPT-3 using only about one-third of the energy of GPT-3’s GPU-based training. Techniques like quantization, pruning, and distillation also shrink models with little or no loss of accuracy; distilling a large model into a smaller one, for instance, can cut inference energy by ~50–60%. Continued efficiency gains may therefore partially offset raw energy growth, though it remains unclear whether they will keep pace with model scaling.
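A doubling time converts to an annual growth factor as 2^(12 / months). The sketch below applies this to the 3–4 month compute-doubling range above and, for contrast, to a hypothetical 24-month efficiency-doubling pace (an illustrative assumption, not a figure from this report):

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth over 12 months for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)

# 3 and 4 months: the compute-doubling range quoted above.
# 24 months: a hypothetical efficiency-doubling pace, for comparison only.
for months in (3, 4, 24):
    print(f"doubling every {months:>2} months -> x{annual_growth_factor(months):.1f} per year")
```

A quantity doubling every 3–4 months grows 8–16× per year, whereas a two-year doubling yields only about 1.4× per year, which illustrates why efficiency gains can struggle to keep pace with scaling.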

AI vs Traditional Workloads

Not all data center workloads grow equally. Traditional IT services (web hosting, databases, enterprise software) are still growing, but AI workloads are by far the fastest-growing slice of demand. As noted above, a generative AI training cluster can draw seven to eight times more energy than a typical computing workload. The growth of AI is already visible in procurement and infrastructure: shipments of data center GPUs (predominantly NVIDIA’s) jumped ~44% from 2022 to 2023. Many new data centers are designed around high-density GPU clusters with liquid cooling and stronger power feeds, rather than the lower-density layouts of traditional servers.

This shift has consequences for infrastructure. In conventional servers, energy use is spread among CPUs, storage, and networking, whereas AI clusters are GPU-dominated. Because GPUs (and TPUs) draw much more power per chip, they create local peaks in electricity and cooling demand. The share of data center energy going to accelerators is rising accordingly: IEA analysis suggests accelerated servers will account for roughly half of the growth in data center electricity use through 2030, while CPU-only servers grow more slowly and become a smaller share of the total. In other words, the mix of IT hardware in data centers is shifting toward more power-intensive components as AI grows.

Even so, not all electricity in a data center is used for computation; cooling and other infrastructure consume a significant fraction. PUE (power usage effectiveness) is the ratio of total facility energy to the energy delivered to IT equipment. A modern hyperscale data center typically has a PUE of around 1.1–1.2, meaning that cooling and facility overhead equals 10–20% of the IT load (roughly 9–17% of total facility power). Across data centers more broadly, the IEA estimates that about 60% of a typical facility’s electricity runs the servers themselves, with the rest going to storage, networking, cooling, UPS, and other systems. Hyperscalers continually refine cooling (e.g. using outside air or liquid cooling) to reduce this overhead, especially as rack densities climb above 30–50 kW per rack. (In ultra-dense racks, liquid cooling can cut the power needed for cooling by up to 90% compared to traditional air cooling.)
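PUE is easy to misread because it is defined relative to IT energy, not total energy. A short sketch of both conversions; the 100 MWh IT load is an arbitrary illustrative number:

```python
def overhead_fraction(pue: float) -> float:
    """Share of total facility energy that does not reach IT equipment.

    PUE = total facility energy / IT equipment energy, so the non-IT share of
    the total is (PUE - 1) / PUE.
    """
    if pue < 1:
        raise ValueError("PUE cannot be below 1.0")
    return (pue - 1) / pue

def facility_energy_mwh(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy needed to deliver `it_energy_mwh` to IT equipment."""
    return it_energy_mwh * pue

for pue in (1.1, 1.2):
    print(f"PUE {pue}: overhead = {pue - 1:.0%} of IT load, "
          f"{overhead_fraction(pue):.1%} of total")
# Total facility energy for a hypothetical 100 MWh IT load at PUE 1.2.
print(facility_energy_mwh(100, 1.2))
```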

Energy in Edge Devices and Industry

While data centers dominate AI’s electricity use today, smaller-scale AI on edge devices is growing. Smartphones, cameras, and IoT devices increasingly run neural networks locally (image enhancement, voice assistants, etc.). Even so, ML still represents a tiny share of device power: one study found that ML tasks account for <3% of a phone’s energy, with most use still going to the screen, radios, and apps. Even collectively, billions of phones running occasional AI features likely consume far less energy than the data centers that serve cloud AI requests.

In industrial settings, AI can both add and save power. Factories using AI-driven optimization may run more efficiently overall (e.g. predictive maintenance reduces waste, AI vision minimizes scrap), but the AI systems themselves (sensors, vision systems, robot controllers) add electrical load. Modern industrial robots, for example, often recover braking energy and throttle power during idle time, saving energy compared to older models. AI also supports advanced manufacturing such as semiconductor fabs, where machines run 24/7 at high power densities. The net impact in industry is sector-specific: studies suggest AI can reduce energy intensity per unit of output, but absolute use can still rise as companies run more automated capacity.

Healthcare, transportation, and other sectors likewise see mixed effects. Self-driving vehicles use substantial onboard compute (up to hundreds of watts per car for sensors and chips) but may cut system-wide fuel use. Data-driven energy systems (smart grids, AI-managed data centers) promise efficiency gains, yet they themselves depend on data center computing. In sum, AI is increasingly embedded “everywhere,” so its energy footprint spans every sector, from consumer gadgets to factories, even though data centers remain its largest locus.

Hardware and Software Efficiency Factors

The rate of AI’s energy growth depends critically on hardware and software innovations. On the hardware side, accelerator chips (GPUs, TPUs, specialized ASICs) have become the norm for AI because they deliver much higher throughput per watt (often quoted in TOPS/W, tera-operations per second per watt) than general-purpose CPUs. Chips built on leading-edge process nodes (5 nm and below) also reduce energy per operation. Cloud providers custom-design data center infrastructure (power feeds, cooling) to maximize GPU uptime, and they carefully tune both hardware utilization (how much of the time machines do useful work) and power provisioning (avoiding overhead such as fans running in idle racks).
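Efficiency ratings and utilization interact. The sketch below converts a TOPS/W rating into energy per operation, then applies a simple linear power model, a common simplification in which power rises linearly from an idle floor to a peak as utilization increases. All chip numbers (10 TOPS/W, 100 W idle, 400 W peak, 10^15 ops/s) are hypothetical, not specifications of any real device:

```python
def joules_per_op(tops_per_watt: float) -> float:
    """Energy per operation (J) implied by an efficiency rating in TOPS/W."""
    return 1 / (tops_per_watt * 1e12)

def energy_per_useful_op(idle_w: float, peak_w: float, peak_ops_per_s: float,
                         utilization: float) -> float:
    """Joules per useful operation under a linear power model.

    Power scales linearly from idle_w (no work) to peak_w (fully busy), and
    throughput scales with utilization. All inputs are hypothetical.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    power_w = idle_w + (peak_w - idle_w) * utilization
    return power_w / (peak_ops_per_s * utilization)

print(f"10 TOPS/W -> {joules_per_op(10) * 1e12:.2f} pJ per op")
busy = energy_per_useful_op(100, 400, 1e15, 1.0)
light = energy_per_useful_op(100, 400, 1e15, 0.25)
print(f"energy per op at 25% vs 100% utilization: {light / busy:.2f}x")
```

With these hypothetical numbers, running at 25% utilization costs 1.75× as much energy per useful operation as running flat out, because the idle power floor is spread over less work.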

Software and algorithmic factors are equally important. Large models are being compressed via pruning, low-precision math, and distillation. For example, distilling a model can cut its size by up to 90% and reduce inference energy by ~50–60%. Research on energy-aware model design (efficient transformers, binary quantization, sparse layers) aims to keep performance while lowering computation. Additionally, smarter scheduling – such as batching inference queries or using lower-power chips during low demand – can shave operational energy. The collective impact is that efficiency gains continue, but they are racing against a backdrop of ever-larger models and more use cases.
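Low-precision math is one of the compression techniques named above. A minimal sketch of symmetric per-tensor int8 quantization in plain Python; this is one common scheme among several (production frameworks add per-channel scales, calibration, and integer kernels), and the weight values are arbitrary examples. It shows the 4× storage reduction versus 32-bit floats and the bounded rounding error:

```python
from array import array

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = array("f", weights).itemsize * len(weights)  # 4 bytes per value
int8_bytes = array("b", q).itemsize * len(q)              # 1 byte per value
print(q)                       # [50, -127, 0, 100]
print(fp32_bytes, int8_bytes)  # 16 4
# Rounding error per weight is bounded by scale / 2.
print(max(abs(a - b) for a, b in zip(weights, restored)))
```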

Another factor is the power density of AI hardware. GPUs and TPUs draw a lot of power (300 W or more per chip), and packing dozens of them into a rack produces very high heat loads that require advanced cooling. Traditional server racks, by contrast, typically draw 10–15 kW. High density improves compute per square meter but raises the energy needed for cooling, so the industry is experimenting with liquid immersion cooling and advanced airflow designs.
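A back-of-envelope sketch of why accelerator racks outgrow traditional power envelopes. Only the 300 W per chip and 10–15 kW figures come from the text; the chip counts and the 700 W case are hypothetical configurations, and the sketch counts accelerator power only:

```python
def accelerator_rack_kw(chips_per_rack: int, watts_per_chip: float) -> float:
    """Power (kW) drawn by the accelerators alone in one rack.

    Ignores host CPUs, memory, networking, and fans, so a real rack draws more.
    """
    return chips_per_rack * watts_per_chip / 1000

TRADITIONAL_RACK_MAX_KW = 15  # upper end of the 10-15 kW range quoted above

# Hypothetical configurations for illustration.
for chips, watts in [(32, 300), (48, 300), (64, 700)]:
    kw = accelerator_rack_kw(chips, watts)
    note = "exceeds" if kw > TRADITIONAL_RACK_MAX_KW else "fits within"
    print(f"{chips} chips x {watts} W = {kw:.1f} kW ({note} a traditional rack's range)")
```

In the 48-chip case the accelerators alone approach the top of the traditional range before any host hardware is counted, and the 700 W case lands in the 30–50 kW territory mentioned earlier.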

Finally, there are system-level factors. Some operators shift flexible computation to times and places with cleaner electricity: Google, for instance, times some workloads to match renewable supply, reducing emissions. The IEA expects about half of new data center electricity through 2030 to come from renewables. Even so, the grid mix matters: training or serving an AI model in a coal-dependent region generates far more CO₂ than doing so on a grid with a high share of renewables.
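Two calculations underlie this paragraph: emissions scale as energy × grid carbon intensity, and carbon-aware scheduling picks the lowest-intensity window for a flexible job. A minimal sketch of both; the intensities (0.8 and 0.05 tCO₂/MWh) and the hourly forecast are hypothetical values, not measurements of any real grid, and the sliding-window search is a simple illustration rather than any operator’s actual scheduler:

```python
def emissions_tonnes(energy_mwh: float, intensity_t_per_mwh: float) -> float:
    """CO2 emissions in tonnes: energy used x grid carbon intensity."""
    return energy_mwh * intensity_t_per_mwh

def best_start_hour(forecast: list[float], duration_h: int) -> int:
    """Start hour of the `duration_h`-hour window with the lowest summed intensity.

    `forecast` holds one carbon-intensity value per hour, in any consistent unit.
    """
    if not 0 < duration_h <= len(forecast):
        raise ValueError("duration must fit inside the forecast")
    window = sum(forecast[:duration_h])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - duration_h + 1):
        # Slide the window one hour later: add the new hour, drop the oldest one.
        window += forecast[start + duration_h - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start

# Hypothetical intensities (tCO2/MWh) applied to GPT-3's estimated 1,287 MWh.
for label, intensity in [("coal-heavy", 0.8), ("low-carbon", 0.05)]:
    print(f"{label}: {emissions_tonnes(1287, intensity):,.0f} t CO2")

# Hypothetical six-hour forecast; schedule a flexible two-hour job.
forecast = [0.45, 0.40, 0.20, 0.15, 0.18, 0.35]
print(best_start_hour(forecast, 2))  # 3
```

Under those hypothetical intensities, the same training run corresponds to roughly 1,030 t of CO₂ on the coal-heavy grid versus about 64 t on the low-carbon one.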

Future Outlook and Projections

Looking ahead, all credible forecasts point to substantially higher AI-related power use. The IEA’s base case has global data center demand reaching 945 TWh by 2030 (up from 415 TWh in 2024) and potentially ~1,200 TWh by 2035. Further out, uncertainty widens: faster AI adoption could push demand well above the base case by the mid-2030s, while rapid efficiency gains could moderate growth. Either way, data centers will likely consume a few percent of global electricity in the 2030s (the IEA puts the share at ~3% in 2030).
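The growth rates behind these projections can be made explicit with the compound-annual-growth-rate formula, (end / start)^(1 / years) − 1. A minimal sketch using only figures quoted in this report (the IEA base case above and the IDC estimate from the introduction); the implied global total simply divides by the rounded ~3% share, so treat it as approximate:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate implied by growing from `start` to `end`."""
    return (end / start) ** (1 / years) - 1

# Figures quoted in this report, in TWh.
iea_2024, iea_2030, iea_2035 = 415, 945, 1200  # IEA base case
idc_2023, idc_2028 = 352, 857                   # IDC estimate

near_term = cagr(iea_2024, iea_2030, 2030 - 2024)
later = cagr(iea_2030, iea_2035, 2035 - 2030)
idc = cagr(idc_2023, idc_2028, 2028 - 2023)
print(f"IEA 2024-2030: {near_term:.1%}/yr")  # ~14.7%
print(f"IEA 2030-2035: {later:.1%}/yr")      # ~4.9%
print(f"IDC 2023-2028: {idc:.1%}/yr")        # ~19.5%

# Rough global total implied if 945 TWh is ~3% of world electricity in 2030.
implied_global_twh = iea_2030 / 0.03
print(f"Implied global demand in 2030: ~{implied_global_twh:,.0f} TWh")  # ~31,500
```

On these numbers, the base case implies growth of roughly 15% per year through 2030, slowing to about 5% per year afterwards, while IDC’s nearer-term estimate implies faster growth.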

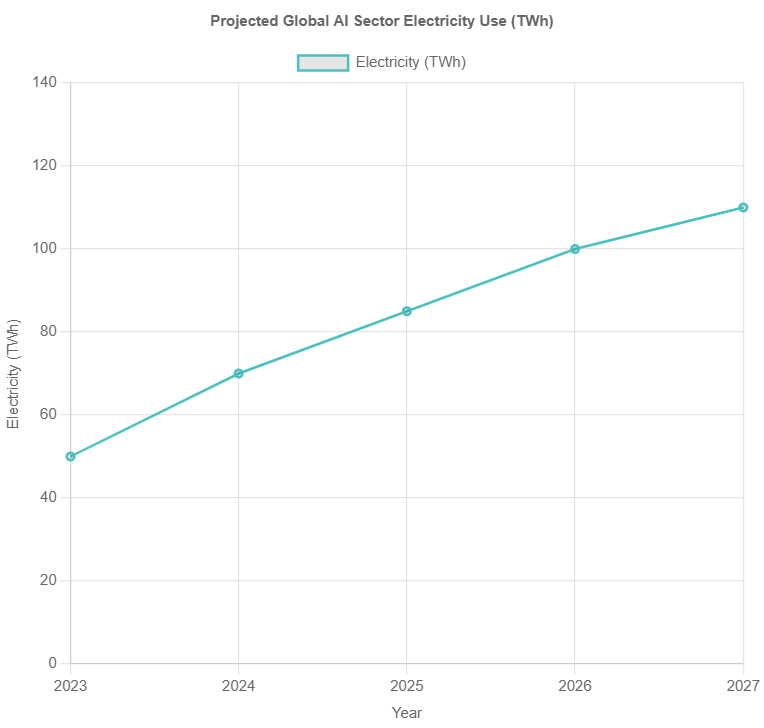

Projections for AI-specific consumption echo this trend. One analysis (Alex de Vries, based on projected NVIDIA AI-server shipments) predicts the AI sector’s electricity use could reach 85–134 TWh per year by 2027, about half a percent of global electricity. Some industry observers have forecast that generative AI alone might account for ~1.5% of world power use by 2029, though such figures are highly scenario-dependent. Regardless, the consensus is that AI’s share of electricity will move from negligible to significant: at 85–134 TWh, AI alone would use about as much electricity as a country such as the Netherlands or Sweden, and the IEA has estimated that data centers could add the equivalent of one Sweden to one Germany in electricity demand between 2022 and 2026.
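Estimates like the 85–134 TWh range are typically built bottom-up: number of servers × power per server × hours of operation. A minimal sketch of that arithmetic; the inputs (1.5 million AI servers drawing 6.5 kW or 10.2 kW each, running at full utilization all year) are assumptions chosen here because they reproduce the quoted range, and full utilization makes the result an upper-bound-style estimate:

```python
HOURS_PER_YEAR = 8760

def fleet_twh(units: float, kw_per_unit: float, utilization: float = 1.0) -> float:
    """Annual energy (TWh) for a server fleet: units x power x hours x utilization."""
    return units * kw_per_unit * utilization * HOURS_PER_YEAR / 1e9  # kWh -> TWh

# Assumed inputs: 1.5 million servers at 6.5 kW (low) or 10.2 kW (high) each.
low = fleet_twh(1.5e6, 6.5)
high = fleet_twh(1.5e6, 10.2)
print(f"{low:.1f}-{high:.1f} TWh/yr")  # 85.4-134.0
```

Lowering `utilization` below 1.0 shows how sensitive such projections are to how hard the deployed hardware is actually driven.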

Chart – Projected Global AI Sector Electricity Use

These projections have sparked policy and industry responses. Some governments (e.g. Singapore, parts of Europe) are introducing data center efficiency or sizing regulations. Tech firms are pledging 24/7 carbon-free energy and investing in grid-scale renewables. Others advocate for “energy ratings” on AI models or more transparency on their power needs.

In summary, the last five years have seen AI’s electricity demand rise sharply alongside its capabilities. Data center electricity use has been growing at >10% annually, increasingly fueled by AI. Training and running large models like GPT and DALL·E consume gigawatt-hours of electricity, with correspondingly larger carbon footprints on carbon-intensive grids. Unless countered by efficiency gains or cleaner energy, this trend will continue. By 2030, data centers, driven largely by AI workloads, are projected to account for roughly 3% of global electricity use. The stakes are high: powering AI securely and sustainably will require both technological innovation and careful policy planning to balance its benefits against environmental and grid impacts.

Key points: Globally, data center electricity use is projected to more than double by 2030 (to ~945 TWh), with AI workloads as the primary growth engine. Training a top model (e.g. GPT-3) can use >1 GWh of energy, and running these models at scale (inference) adds further demand. Hardware shifts (GPUs/TPUs), efficiency improvements (model compression), and cleaner supply (renewables) shape the trend. Overall, AI’s share of power use is climbing into the low-percent range of global electricity, raising concerns about grids, emissions, and cooling needs, especially given AI’s cross-sector expansion.

Sources: Authoritative data and analyses from the IEA, MIT (CSAIL), industry reports (IDC, Deloitte), and recent research were used to compile this report. These document the trends, model-level energy metrics, and forecasts discussed above.